Database users care about different things in different application scenarios: read/write latency, throughput, scalability, reliability, availability, and so on. Some of these concerns can be addressed through application architecture or hardware, but at the cost of a more complex system, which in turn makes operation and maintenance, upgrades, and fault location harder and can even reduce availability. Some business scenarios (for example in finance and telecom) place higher demands on the database, such as reliability (e.g. an RPO of 0) and availability (e.g. 99.999%, i.e. no more than about 5.26 minutes of downtime per year). In a distributed environment there are further risks, such as data loss and service unavailability caused by host, network, storage, power, and other failures. Dealing with these problems consumes a great deal of labor and resources at every stage of a business system: design, implementation, operation and maintenance, and upgrade.

AntDB-M (the AntDB in-memory engine) adopts a number of designs to improve the reliability and availability of its services, simplify the architecture of business systems, and let users focus on the business itself. One of these designs is CheckPoint. Its goal is to take a snapshot of the data in the database without affecting the business, so that the snapshot can be used to recover the service quickly. Its design principles are efficiency and simplicity.

1. Brief description of functions

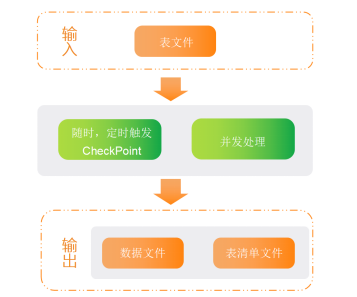

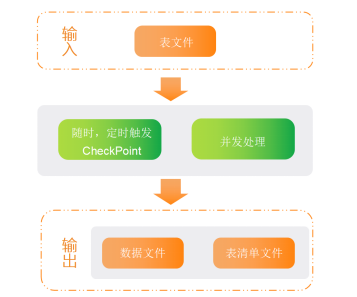

A CheckPoint in AntDB-M can be triggered on demand or at a scheduled time, and each trigger checkpoints all tables. Only one CheckPoint can run at a time: a new request fails if the previous one has not completed. Within a single CheckPoint, tables can be exported in parallel when there are many of them, with a maximum parallelism equal to the number of tables. On success, two types of files are written to the specified directory: 1) data files, one per table, and 2) a table list file containing the number of the transaction that initiated the CheckPoint and a list of all tables.

CheckPoint files can be used for quick database loading. The table list file can be edited to select the tables that need to be loaded.
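The triggering rules above can be sketched in Python. This is a minimal illustration under stated assumptions, not AntDB-M's actual interface: `CheckpointRunner`, `CheckpointBusy`, the `export_table` callback, and the `.dat` file names are all hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class CheckpointBusy(Exception):
    """Raised when a CheckPoint is requested while another one is running."""


class CheckpointRunner:
    """Hypothetical coordinator for one CheckPoint at a time."""

    def __init__(self, tables, workers=1):
        self.tables = list(tables)
        # Parallelism is capped at the number of tables.
        self.workers = max(1, min(workers, len(self.tables)))
        self._running = threading.Lock()

    def run(self, txn_id, export_table):
        # Non-blocking acquire: a second request fails instead of queuing.
        if not self._running.acquire(blocking=False):
            raise CheckpointBusy("a CheckPoint is already in progress")
        try:
            with ThreadPoolExecutor(max_workers=self.workers) as pool:
                data_files = list(pool.map(export_table, self.tables))
            # The table list records the initiating transaction and all tables.
            return {"txn_id": txn_id, "tables": self.tables,
                    "data_files": data_files}
        finally:
            self._running.release()


# Usage: eight workers are requested, but only three tables exist.
runner = CheckpointRunner(["orders", "users", "items"], workers=8)
result = runner.run(txn_id=42, export_table=lambda name: name + ".dat")
```

Because the returned table list is plain data, selecting which tables to load later amounts to removing entries from it before the import.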

Figure 1: Brief description of functions

2. Design implementation

The following section describes how CheckPoint was designed to achieve its design goals and requirements.

2.1 No business impact

CheckPoint must not block normal access to database services while it runs, which means the data keeps changing during a CheckPoint. To avoid blocking modifications while still exporting a consistent image of the data, AntDB-M introduces a CheckPoint state and a table cache.

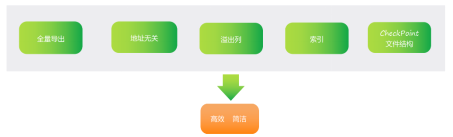

Figure 2: Design implementation - no business impact

2.1.1 CheckPoint state

AntDB-M has three states related to CheckPoint: 1) data export; 2) exported file processing; 3) export completion. The state "1 - Data Export" means that in-memory data is being exported to a file. This state is central to the consistency of the exported data; the following sections describe how consistency is guaranteed with reference to it.

2.1.2 AntDB-M table cache

AntDB-M divides data management into two parts: 1) table cache; 2) table data (including table metadata). Normally, modifications touch only "2-Table Data". The table cache is used only while AntDB-M is performing a CheckPoint and is in the "1-Data Export" state.

2.1.3 Export process and data consistency assurance

AntDB-M exports a CheckPoint in the following steps, which together ensure the consistency of the exported data.

1. Status setting

When a CheckPoint starts, the CheckPoint status of the AntDB-M service is first set to "1 - Data Export". Once this state is entered, AntDB-M enables special processing of the table cache.

2. Data backup of uncommitted transaction

After entering "1-Data Export" and before any data is exported, a copy of the original data of every record touched by a currently uncommitted transaction is saved to the table cache. These copies ensure that data from uncommitted transactions is not exported, which is part of the consistency guarantee.

3. Table cache modification

Once CheckPoint enters the "1-Data Export" state, every insert, delete, and update modifies both the table cache and the table data. What is written to the table cache depends on the operation type; the logic applied to the table data is unchanged.

- insert

Record the record ID of the newly inserted data in the table cache (the record ID is described in section 2.2.2).

- delete

Record the record ID of the deleted data in the table cache, along with the record data.

- update

Record the record ID of the updated data in the table cache, along with the record data. If a record is updated more than once, only the first update goes into the table cache.
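A minimal Python sketch of the three cache actions above follows. `TableCache` and its method names are hypothetical. The sketch also assumes that a row inserted during the export needs no original image, and that record IDs are not reused while the export is running; the source does not state either point.

```python
class TableCache:
    """Hypothetical per-table cache, active only in the data export state."""

    def __init__(self):
        self.inserted = set()     # record IDs created during the export
        self.originals = {}       # record ID -> row as it was before the change

    def on_insert(self, record_id):
        # insert: only the record ID is kept.
        self.inserted.add(record_id)

    def on_delete(self, record_id, old_row):
        # delete: the record ID and the deleted row are kept.
        self._remember(record_id, old_row)

    def on_update(self, record_id, old_row):
        # update: the record ID and the row before the update are kept.
        self._remember(record_id, old_row)

    def _remember(self, record_id, old_row):
        # Assumption: rows born during the export have no pre-export image.
        if record_id in self.inserted:
            return
        # Only the first change is stored; later ones leave the image as is.
        self.originals.setdefault(record_id, old_row)


cache = TableCache()
cache.on_update(1, "v0")   # first update: original image "v0" is cached
cache.on_update(1, "v1")   # second update: ignored by the cache
cache.on_delete(2, "d0")
cache.on_insert(5)
```

Keeping only the first image is what lets the cache describe the state at the moment the export began, no matter how many times a row changes afterwards.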

2.1.4 Exporting table data to a file

The table data is exported to the file in full, except for data blocks newly created during the CheckPoint. Because the service is not blocked, the table data keeps changing during the export, and at this stage no attempt is made to keep the contents of each data block consistent. Consistency is restored in the next step (section 2.1.5).

2.1.5 Update files by using cache

As steps 2 and 3 of section 2.1.3 show, all changes made while the CheckPoint status is "1 - Data Export" are recorded in the table cache. After the table data has been exported, the file is updated with the records from the table cache, which makes it consistent: the CheckPoint file is a snapshot of the data at the moment CheckPoint entered the "1-Data Export" state. This update may involve random writes, but because the CheckPoint process is fast and the volume of randomly written data is small, the impact is negligible.

File update rules:

- insert: delete the record from the file

- update: restore the original record

- delete: restore the deleted record
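The file update rules above can be illustrated with a small Python sketch. It models the exported file as a dictionary from record ID to row, which is a simplification for illustration only; `apply_cache` and its parameters are hypothetical names.

```python
def apply_cache(exported, inserted_ids, originals):
    """Rewind an exported {record_id: row} image to the export start point."""
    snapshot = dict(exported)
    for record_id in inserted_ids:
        # insert -> delete the record from the file (it may not be there).
        snapshot.pop(record_id, None)
    for record_id, row in originals.items():
        # update/delete -> put the original row back.
        snapshot[record_id] = row
    return snapshot


# State when the export began.
start = {1: "a", 2: "b", 3: "c"}

# During the export: row 1 is updated, row 2 is deleted, row 4 is inserted.
inserted_ids = {4}
originals = {1: "a", 2: "b"}

# Two possible "fuzzy" exports, depending on when each row was copied.
export_late = {1: "a2", 3: "c", 4: "d"}    # every change was already visible
export_mixed = {1: "a", 3: "c", 4: "d"}    # row 1 was copied before its update

fixed_late = apply_cache(export_late, inserted_ids, originals)
fixed_mixed = apply_cache(export_mixed, inserted_ids, originals)
```

Both corrected images equal `start`, which is why the export itself does not need to care whether a given row was copied before or after it changed.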

2.2 Efficient and simple

CheckPoint is efficient in two ways: 1) exporting; and 2) importing. The following sections describe the design choices that make it both simple and efficient.

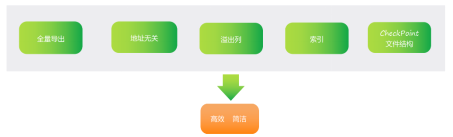

Figure 3: Design implementation - efficient and simple

2.2.1 Full export

AntDB-M's CheckPoint is a full export, which is very different from the checkpoint of MySQL InnoDB. The following points explain why a full export was chosen.

- Memory overhead pressure

As an in-memory database, AntDB-M already keeps all data in memory, so the data itself adds no extra memory pressure, and there is no need to flush data to files in real time just to free the memory it occupies.

- High availability guarantee

As a highly available distributed database, AntDB-M relies on a multi-copy mechanism, so high availability is achieved through multiple services. Exporting data mainly serves to shorten loading time when a service restarts and to shorten master-slave data synchronization. CheckPoint files are therefore not the main means of high availability: exports do not need to be close to real time, and a lower export frequency has little impact on availability.

- Read/write performance

For data import and export, disk read and write performance is the dominant factor, and disks are most efficient with sequential reads and writes. Reading and writing the data directly, instead of converting formats between memory and files, is therefore the most efficient approach. Incremental synchronization would require either frequent random reads and writes or complex conversions and extra file storage space, both of which hurt efficiency and add complexity to the system.

- Export time

The memory structure of AntDB-M is designed to be very compact and independent of memory addresses, so data can be exported and imported without conversion. This is highly efficient and keeps the duration of a single export within an acceptable range.

Taken together, these points make a full export the more efficient and cost-effective choice.

2.2.2 Address-independent

The memory structure of AntDB-M is very compact: apart from the space needed to store the data itself, it needs no additional management space, which avoids wasted space and keeps the amount of exported data small. The other design that makes import and export efficient is address independence, which avoids a large number of address mapping conversions during import and export.

- Record ID

The record ID is a key part of the memory structure. Every data record has a unique record ID, and the memory address of a record can be obtained from its record ID with simple, efficient division and remainder (modulo) operations. This makes the storage of table data address-independent, so no address translation is required for import and export.
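As a rough illustration of how a record ID can map to a location with division and remainder, consider the Python sketch below. The block capacity, the row width, and the function names are assumptions made for the example; the source does not give AntDB-M's actual layout.

```python
ROWS_PER_BLOCK = 1024   # assumed number of rows per data block
ROW_SIZE = 64           # assumed fixed row width in bytes


def locate(record_id):
    """Return (block number, byte offset inside that block) for a record ID."""
    block_no, slot = divmod(record_id, ROWS_PER_BLOCK)
    return block_no, slot * ROW_SIZE


def record_id_of(block_no, byte_offset):
    """Inverse mapping: recover the record ID from a block-relative location."""
    return block_no * ROWS_PER_BLOCK + byte_offset // ROW_SIZE


print(locate(2050))   # (2, 128)
```

The result depends only on the record ID and on constants, never on where a block happens to live in memory, so the same IDs remain valid after the blocks are reloaded at different addresses.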

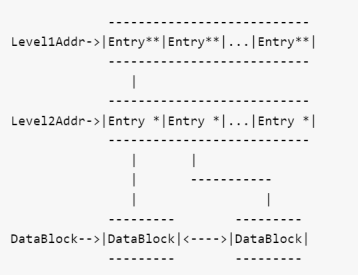

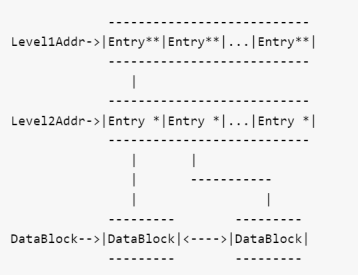

- Multi-level management

Each table in AntDB-M has its own independent tablespace, and each tablespace is managed in three levels. The first and second levels are address spaces that exist only in memory, and the third level consists of address-independent data blocks. Exporting only needs to write out the data blocks. On import, the three levels of memory space are allocated and the relationships between them are rebuilt from the order of the data blocks; this involves little data and is fast.

Since the contents of the data block are address-independent, the entire block is written to the file when exporting, and the data in the file is read directly into the corresponding memory block when importing. This greatly improves the efficiency of exporting and importing.
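The whole-block export and import described above can be sketched as follows. The block size, the fan-out of the upper levels, and all function names are assumptions for illustration; the real file also contains metadata that this sketch leaves out.

```python
import io

BLOCK_SIZE = 4096   # assumed size of one data block in bytes


def export_blocks(blocks, out):
    """Write every data block to the file as is, with no conversion."""
    for block in blocks:
        out.write(block)


def import_blocks(src):
    """Read the file back into memory blocks, preserving their order."""
    blocks = []
    while True:
        chunk = src.read(BLOCK_SIZE)
        if not chunk:
            break
        if len(chunk) != BLOCK_SIZE:
            raise ValueError("truncated block")
        blocks.append(bytearray(chunk))
    return blocks


def build_directory(blocks, fanout):
    """Rebuild the in-memory upper levels purely from block order.

    The outer list stands for level 1, each inner list for a level-2 entry,
    and the elements for the level-3 data blocks.
    """
    return [blocks[i:i + fanout] for i in range(0, len(blocks), fanout)]


# Round trip through an in-memory file.
original = [bytearray([i]) * BLOCK_SIZE for i in range(5)]
buf = io.BytesIO()
export_blocks(original, buf)
buf.seek(0)
loaded = import_blocks(buf)
directory = build_directory(loaded, fanout=2)
```

Only the blocks travel through the file; the directory is cheap to recreate because it depends on nothing but the order in which the blocks were written.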

- Idle record

The management data for the idle (free) records of a data block is stored in the data block itself, so no additional management unit is needed. All idle records form a doubly linked list, and only the position of the last idle record needs to be recorded separately. In addition, 1 extra byte is reserved in each row to identify the current status of the record.

With the above points, the management of data blocks is compact and concise, and at the same time very efficient.
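One way to keep the idle list inside the block itself is sketched below in Python. The row width, the status values, the link encoding, and the `Block` class are all assumptions for illustration; the source only states that idle records form a doubly linked list, that the last idle position is tracked, and that each row has a 1-byte status.

```python
import struct

ROW_SIZE = 16                  # assumed: 1 status byte + 15 payload bytes
FREE, USED = 0, 1              # assumed status values
NIL = 0xFFFFFFFF               # marks "no neighbour"
LINK = struct.Struct("<II")    # (previous idle slot, next idle slot)


class Block:
    def __init__(self, rows):
        self.buf = bytearray(rows * ROW_SIZE)
        self.last_free = NIL   # the only bookkeeping kept outside the rows
        for slot in range(rows):
            self._push_free(slot)

    def _links(self, slot):
        return LINK.unpack_from(self.buf, slot * ROW_SIZE + 1)

    def _set_links(self, slot, prev, nxt):
        LINK.pack_into(self.buf, slot * ROW_SIZE + 1, prev, nxt)

    def _push_free(self, slot):
        # An idle row stores its list links in its own payload area.
        self.buf[slot * ROW_SIZE] = FREE
        self._set_links(slot, self.last_free, NIL)
        if self.last_free != NIL:
            prev, _ = self._links(self.last_free)
            self._set_links(self.last_free, prev, slot)
        self.last_free = slot

    def allocate(self, payload):
        if self.last_free == NIL:
            raise MemoryError("block is full")
        if len(payload) > ROW_SIZE - 1:
            raise ValueError("payload too large for one row")
        slot = self.last_free
        prev, _ = self._links(slot)
        if prev != NIL:
            before_prev, _ = self._links(prev)
            self._set_links(prev, before_prev, NIL)
        self.last_free = prev
        start = slot * ROW_SIZE
        self.buf[start] = USED
        self.buf[start + 1:start + ROW_SIZE] = payload.ljust(ROW_SIZE - 1, b"\0")
        return slot

    def free(self, slot):
        if self.buf[slot * ROW_SIZE] != USED:
            raise ValueError("slot is not in use")
        self._push_free(slot)
```

Since the links are slot numbers rather than pointers, the idle list survives an export and import unchanged, which fits the address-independent design described in this section.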

Figure 4: Multi-level management of tablespace

2.2.3 Overflow columns

AntDB-M manages variable-length columns separately as overflow columns, with their own memory space and structure. The data block stores only the fixed-length columns, plus the length and record ID of each overflow column.

The structure of overflow columns is similar to that of data blocks: it is also multi-level and address-independent. To save memory and stay efficient, each overflow column uses a fixed row length, which may differ from column to column. Each row keeps an extra record ID: when a value is longer than one row, this ID records the location of the row that holds the next part of the data.
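The chaining of fixed-length overflow rows can be sketched in Python as follows. `OverflowColumn`, the `NIL` marker, and the list-based storage are assumptions for illustration, not AntDB-M's real structure.

```python
NIL = -1   # assumed marker for "no further row"


class OverflowColumn:
    """Hypothetical overflow storage with a fixed row length per column."""

    def __init__(self, row_len):
        self.row_len = row_len
        self.rows = []   # each entry: (chunk of bytes, next record ID)

    def store(self, value):
        """Split a value into rows and return the record ID of the first row."""
        step = self.row_len
        chunks = [value[i:i + step] for i in range(0, len(value), step)] or [b""]
        first = len(self.rows)
        for n, chunk in enumerate(chunks):
            is_last = n == len(chunks) - 1
            next_id = NIL if is_last else first + n + 1
            self.rows.append((chunk, next_id))
        return first

    def load(self, record_id, length):
        """Follow the chain from the first row and return `length` bytes."""
        out = bytearray()
        while record_id != NIL:
            chunk, record_id = self.rows[record_id]
            out += chunk
        return bytes(out[:length])


# The data block would keep only (length, first record ID) for this column.
column = OverflowColumn(row_len=4)
first_id = column.store(b"hello world")
value = column.load(first_id, length=11)
```

With a row length of 4, the 11-byte value occupies three chained rows, and the pair `(11, first_id)` is all the fixed-length part of the record has to hold.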

2.2.4 Index

AntDB-M supports two kinds of indexes: 1) hash; 2) btree. When CheckPoint exports, only the index metadata is exported; the index data is rebuilt in memory when the file is loaded.
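Rebuilding an index from metadata plus the loaded rows can be pictured with the Python sketch below. It covers only a hash-style index, and the function name, the `key_columns` metadata, and the dictionary-based rows are assumptions for illustration.

```python
from collections import defaultdict


def rebuild_hash_index(rows, key_columns):
    """Rebuild a hash index from loaded rows.

    rows:        {record_id: {column name: value}}, as loaded from the file
    key_columns: the indexed columns, i.e. the exported index metadata
    """
    index = defaultdict(list)
    for record_id, row in rows.items():
        key = tuple(row[column] for column in key_columns)
        index[key].append(record_id)
    return dict(index)


rows = {
    0: {"id": 10, "city": "Nanjing"},
    1: {"id": 11, "city": "Beijing"},
    2: {"id": 12, "city": "Nanjing"},
}
by_city = rebuild_hash_index(rows, key_columns=["city"])
```

Rebuilding costs one pass over the rows in memory, while exporting the index contents would add data to the file that can be derived anyway.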

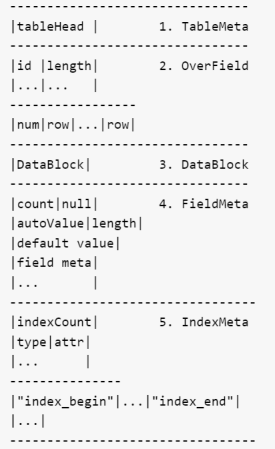

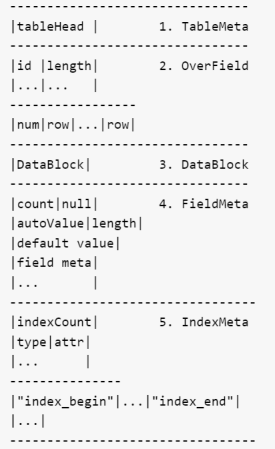

2.2.5 CheckPoint file structure

CheckPoint eventually generates a separate file for each table, which is roughly divided into 5 parts: 1) table metadata; 2) overflow columns; 3) data blocks; 4) column metadata; and 5) indexes.

Figure 5: CheckPoint export file structure

3. Constraints and recommendations

Figure 6: Constraints and recommendations

3.1 DDL constraints

DDL changes are prohibited during CheckPoint because DDL changes table metadata and data; supporting it would greatly increase the memory overhead and the complexity of the system. DDL operations are generally infrequent and their duration is controllable, and the same is true of CheckPoint. Restricting DDL during CheckPoint therefore has little impact on the business system.

3.2 Storage requirements

Import and export place very high demands on disk read and write performance, so high-performance disks are required, preferably SSDs.

3.3 Stagger export

Assuming a disk write speed of 400 MB/s, exporting 100 GB of data takes about 256 seconds (taking 1 GB as 1024 MB). When multiple services are deployed on one host, you can therefore stagger their exports so that no single service's export takes too long. Staggering also helps avoid memory pressure, because copies of data are placed in the table cache during an export.
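The estimate above, and a simple staggering plan, can be worked through in Python. The function names and the example service sizes are assumptions for illustration; the calculation takes 1 GB as 1024 MB and assumes the disk is used by one export at a time.

```python
def export_seconds(data_gb, write_mb_per_s):
    """Time to write data_gb sequentially at the given disk speed."""
    return data_gb * 1024 / write_mb_per_s


def staggered_starts(service_sizes_gb, write_mb_per_s):
    """Start each service's export when the previous one should be done."""
    starts, elapsed = [], 0.0
    for size in service_sizes_gb:
        starts.append(elapsed)
        elapsed += export_seconds(size, write_mb_per_s)
    return starts


print(export_seconds(100, 400))                  # 256.0
print(staggered_starts([100, 50, 100], 400))     # [0.0, 256.0, 384.0]
```

Running the exports one after another keeps each service in the data export state for only as long as its own data takes to write, which also limits how long its table cache keeps growing.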

About AntDB

AntDB was established in 2008. In the core systems of telecom operators, AntDB provides online services for more than 1 billion users in 24 provinces across the country. With product features such as high performance, elastic expansion, and high reliability, AntDB can process one million core communications transactions per second at peak and has kept these systems running continuously and stably for nearly ten years. It has been deployed commercially in communication, finance, transportation, energy, Internet of Things, and other industries.
