AntDB's hyper-convergence and streaming data processing: the organic integration of the traditional database and the stream computing model

Ⅰ

Foreword

By one often-quoted estimate, the amount of information a person is exposed to in a single day today equals what a person in ancient times received in a lifetime. Beyond the sheer volume of information, expectations for "efficiency" and "speed" keep rising. Many business decision makers, for example, are under pressure in the current economic climate to cut costs and raise efficiency, and the old habit of reviewing business reports once a week is giving way to real-time visual analysis of the current state of the business.

However, the basic design concept of the database, the core carrier of information, has changed little in the past half century: the application pulls the data it needs from the database whenever it decides it needs it. This is the "request sent + results returned" model.

Stream processing frameworks such as Apache Storm, Spark Streaming, and Flink take a different approach: they predefine the processing logic for real-time data and then execute the processing and decision procedures as each event occurs. This approach is heavily used in real-time Internet services to pre-process the massive volume of events generated upstream.

However, all of these real-time data processing capabilities are built outside the database engine. Database products, the software that sits closest to the data, have not fully exploited their capabilities over the past 20 years. Applications still pre-process records one by one in the most traditional way and frequently pull additional data from external sources in real time for manual correlation, which places a heavy burden on development and on operation and maintenance.

Therefore, a database with integrated streaming data processing capabilities, one that can define processing logic and topology for real-time data through SQL + triggers, is a new topic that the industry has raised in recent years.

In recent years, a number of companies outside China have begun trying to significantly reduce the development difficulty and the operation and maintenance complexity of future real-time applications. Vendors in China have also gradually integrated streaming data processing into their database products, drawing on their accumulated technology and their understanding of future application scenarios. AntDB is one of the typical representatives and one of the few databases in China to have taken the lead in developing and offering "hyper-convergence + stream/batch integration" capability.

Ⅱ

Hyper-converged architecture, creating a new era of distributed databases

Over the last decade, with the rapid growth of China's financial and Internet industries, any discussion of domestic databases has had to cover distributed and cloud computing capabilities. Business needs such as large data volumes, complex queries, and highly correlated data have driven distributed databases to maturity, bringing the advantages of smooth scale-out, high reliability, and low cost. Meanwhile, delivering databases and other basic software as cloud services helps reduce database operation and maintenance costs and allows resources to be scheduled flexibly.

In the next decade, "digital transformation" is the fast track for moving the economy and society from "quantitative" to "qualitative" growth. User demands on databases are becoming increasingly fine-grained, and system architectures that support multiple kinds of business at the underlying technology layer will be increasingly favored by enterprises. In this context, multi-engine databases with convergence capabilities have begun to appear. HTAP, lake/warehouse integration, and stream/batch integration are the forerunners of this trend, which is known as hyper-convergence.

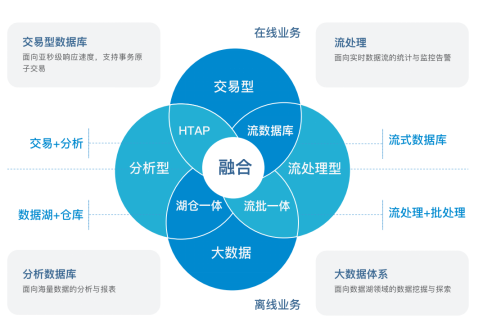

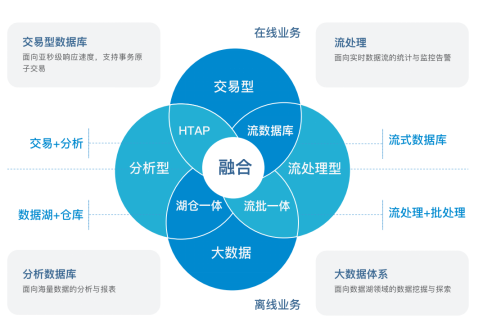

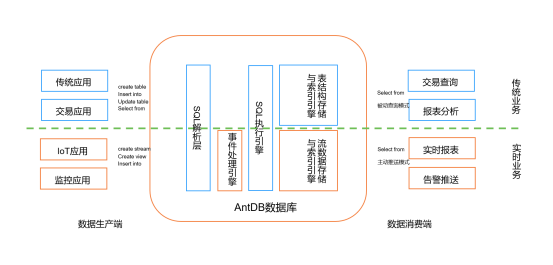

Figure 1: Hyper-convergence architecture of AntDB

AsiaInfo has proposed a new "hyper-convergence" concept that integrates multiple engines and capabilities to serve enterprises' increasingly complex mixed-workload scenarios and mixed-data-type businesses. AntDB's hyper-convergence framework makes full use of the architectural advantages of distributed database engines and extends the concept of HTAP, encapsulating multiple engines such as time-series storage, stream processing execution, and vectorized analysis in a unified architecture.

It supports multiple business models in the same database cluster, meets diverse data requirements, greatly reduces the complexity of business systems, and realizes "one-stop data management" under a unified framework.

Ⅲ

Stream processing engine, disrupting a database kernel that hasn't changed in 50 years

The concept of stream processing

After the attack on the World Trade Center in the United States on September 11, 2001, the U.S. Department of Defense included "active warning" in its macro strategic planning for national defense for the first time. As the world's largest IT company at the time, IBM took on a large share of the work of developing the basic supporting software. IBM InfoSphere Streams, officially released in 2009, is one of the world's first true commercial stream data processing engines. It works by predefining the processing logic for real-time data and then executing the corresponding processing and decision procedures as each event occurs.

This mechanism was directly borrowed by stream processing frameworks such as Apache Storm, Spark Streaming, and Flink, and it is heavily used in real-time Internet services to pre-process the massive volume of events generated upstream. In its 2022 China Database Management Systems Market Guide, Gartner defined it as involving the observation and triggering of "events", often captured at the "edge", including the transfer of processing results to other business phases. It is expected to gain more attention in the next five years.

Figure 2: Gartner's definition of stream/event processing

Difficulties of traditional deployment architectures

However, stream processing frameworks such as Apache Storm, Spark Streaming, and Flink are designed around the "processing" itself. Because they lack database capabilities, they need complex data extraction whenever they must correlate with other data or store intermediate results. Developers have to write large amounts of complex Java/C++/Scala code to pre-process records in the most traditional way, and often have to fetch additional data from external caches or databases in real time for manual association, which places a heavy burden on development and on operation and maintenance.

As the core carrier of information, the database has changed little in its basic design concept over the past half century, and all real-time data processing capabilities have been built outside the database engine, in application frameworks. The software that sits closest to the data has not made full use of its capabilities and advantageous position over the past 20 years.

Integrating streaming data processing into the database is therefore a new topic that the industry has raised in recent years. AntDB is a typical representative: it can define the processing logic and topology for real-time data through SQL + triggers, and it is one of the few databases in China to have taken the lead in developing and offering "hyper-convergence + stream processing" capability.

Over more than ten years of developing AntDB, we have seen many business scenarios in which telecom operators process core data. Some of these needs can be met easily with traditional technologies, but others require real-time processing capabilities such as stream computing.

The organic integration of database and stream processing

The streaming data processing model is very different from the traditional database kernel design. In essence, the traditional database architecture is a "request-response" relationship between the application and the database: the business issues a SQL request, and the database executes it and returns the result.

The stream processing kernel, by contrast, follows a "subscribe-push" model: the business "events" carried by the data are processed according to a predefined data processing model, and the results are then pushed to downstream applications for presentation or storage.
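The contrast between the two models can be sketched in a few lines of Python. This is a minimal illustration only, not AntDB code: the `PullStore` and `PushStream` classes and the `temperature` field are hypothetical names chosen for the example.

```python
from typing import Callable, Dict, List, Tuple

Event = Dict[str, float]
Predicate = Callable[[Event], bool]


class PullStore:
    """Request-response: the application asks, the store answers."""

    def __init__(self) -> None:
        self._rows: List[Event] = []

    def insert(self, row: Event) -> None:
        self._rows.append(row)

    def query(self, predicate: Predicate) -> List[Event]:
        # Nothing is evaluated until the application sends a request.
        return [row for row in self._rows if predicate(row)]


class PushStream:
    """Subscribe-push: logic is registered first, results are pushed per event."""

    def __init__(self) -> None:
        self._subscribers: List[Tuple[Predicate, Callable[[Event], None]]] = []

    def subscribe(self, predicate: Predicate, on_result: Callable[[Event], None]) -> None:
        self._subscribers.append((predicate, on_result))

    def publish(self, event: Event) -> None:
        # The predefined logic runs as each event arrives.
        for predicate, on_result in self._subscribers:
            if predicate(event):
                on_result(event)


def demo() -> Tuple[List[Event], List[Event]]:
    def is_hot(event: Event) -> bool:
        return event["temperature"] > 30.0

    readings = [{"temperature": 25.0}, {"temperature": 35.5}, {"temperature": 31.0}]

    store = PullStore()
    for reading in readings:
        store.insert(reading)
    pulled = store.query(is_hot)  # the application must ask

    pushed: List[Event] = []
    stream = PushStream()
    stream.subscribe(is_hot, pushed.append)  # the application registers once
    for reading in readings:
        stream.publish(reading)  # matching events are delivered immediately
    return pulled, pushed
```

Both paths select the same events; the difference is who initiates the work. In the pull model the application has to decide when to query, while in the push model each matching event reaches the subscriber at the moment it is published.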

For this reason, AntDB has carried out a great deal of exploratory research from scratch in the real-time processing of streaming data, and launched the AntDB-S stream processing database engine at the end of 2022. AntDB-S fully integrates stream computing with traditional transactional and analytical data storage, allowing users to define data structures and real-time processing logic freely through standard SQL inside the database engine.

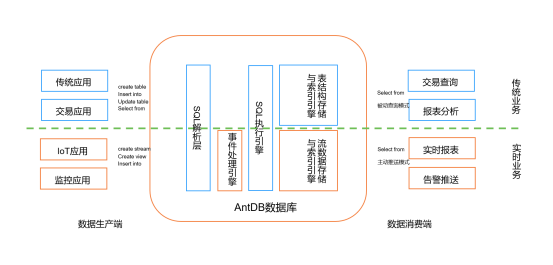

Figure 3: Infrastructure of AntDB stream processing engine

Meanwhile, as data flows freely between stream objects and table objects inside the database, users can at any time carry out performance optimization, data processing, cluster monitoring, and business logic customization by creating indexes, stream-table associations, triggers, materialized views, and so on.
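As a rough illustration of what a stream-table association feeding a continuously maintained result means, the following Python sketch joins each incoming event against a small lookup table and updates a running aggregate. It is a conceptual stand-in and does not use AntDB-S syntax; the `DEVICE_REGION` table, the device ids, and the `kwh` field are assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# "Table object": reference data keyed by device id (hypothetical contents).
DEVICE_REGION: Dict[str, str] = {"d1": "north", "d2": "south", "d3": "north"}


def join_and_aggregate(events: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Join each stream event with the table, then update a per-region total."""
    totals: Dict[str, float] = defaultdict(float)
    for device_id, kwh in events:
        region = DEVICE_REGION.get(device_id)  # stream-table association
        if region is None:
            continue  # no matching row, so the event is dropped (inner-join behavior)
        totals[region] += kwh  # incremental update, in the spirit of a materialized view
    return dict(totals)
```

In a database with built-in stream processing, the lookup and the aggregate would be declared once in SQL and maintained by the engine, rather than hand-written in application code as here.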

Functional advantages

- Technology stack simplification: when processing real-time stream events, the AntDB all-in-one stream processing engine can handle large volumes of real-time data inside the data warehouse, which brings stream processing closer to ordinary transaction processing.

- Standard SQL definition: traditional stream processing offers weak SQL support, so a lot of business code has to be written, whereas AntDB-S handles processing with unified SQL statements, which makes streams more convenient to use.

- Uniform data interface: AntDB-S supports conversion between stream and batch modes, and AntDB's hyper-converged architecture exposes a single interface to the outside world, so data collection and processing do not need to be separated and both stream and batch work can be done in SQL.

- Support for complete transaction processing: traditional stream processing does not support data modification, but AntDB-S supports data modification and transactional operations within stream processing.

- More accurate real-time results: the ACID properties of distributed transactions address disaster tolerance and consistency in real-time stream processing, so the point of failure in the data can be located precisely and stream events can be recalculated and re-aggregated from that point.
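The last two points can be made concrete with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for a transactional engine and is not AntDB-S code; the `totals` and `checkpoint` tables, the `process` function, and the `fail_at` parameter are hypothetical. Each event's data change and the stream offset are committed in the same transaction, so a failure leaves a precise restart point and no event is counted twice.

```python
import sqlite3
from typing import Dict, List, Optional, Tuple


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE totals (user_id TEXT PRIMARY KEY, amount REAL NOT NULL)")
    conn.execute("CREATE TABLE checkpoint (next_offset INTEGER NOT NULL)")
    conn.execute("INSERT INTO checkpoint VALUES (0)")
    conn.commit()
    return conn


def process(conn: sqlite3.Connection,
            events: List[Tuple[str, float]],
            fail_at: Optional[int] = None) -> None:
    """Apply events starting at the stored offset, one transaction per event."""
    start = conn.execute("SELECT next_offset FROM checkpoint").fetchone()[0]
    for offset in range(start, len(events)):
        user_id, amount = events[offset]
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute("INSERT OR IGNORE INTO totals VALUES (?, 0)", (user_id,))
            conn.execute(
                "UPDATE totals SET amount = amount + ? WHERE user_id = ?",
                (amount, user_id),
            )
            if offset == fail_at:
                raise RuntimeError("simulated crash before the offset is saved")
            conn.execute("UPDATE checkpoint SET next_offset = ?", (offset + 1,))


def demo() -> Dict[str, float]:
    events = [("alice", 10.0), ("bob", 5.0), ("alice", 2.5)]
    conn = open_db()
    try:
        process(conn, events, fail_at=1)  # crashes while handling the second event
    except RuntimeError:
        pass  # the half-applied event was rolled back with its transaction
    process(conn, events)  # resumes from the recorded failure point
    return dict(conn.execute("SELECT user_id, amount FROM totals"))
```

Because the data update and the offset update succeed or fail together, rerunning `process` after the simulated crash picks up at the second event and produces the same totals as an uninterrupted run.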

Ⅳ

Real-time data platform, quickly bringing the entire enterprise chain into real time

Introducing advanced concepts such as data warehousing, data mining, and HTAP, loading massive volumes of information through a real-time data application platform, analyzing and processing that information in real time, and overcoming data processing difficulties are now the focus of core system construction for enterprises and institutions in the Internet, finance, government, and other industries.

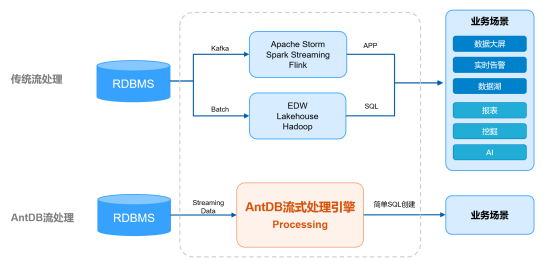

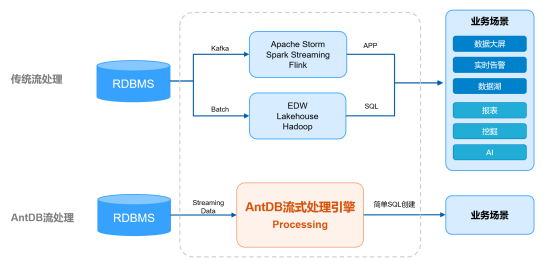

The AntDB-S streaming database can be applied to business scenarios such as real-time data warehouses, real-time reports, real-time alarms, and asynchronous transactions. Users can build complex stream processing business logic directly in simple SQL, which allows AntDB-S to replace traditional stream processing engines such as Apache Storm, Spark Streaming, and Flink in these scenarios.

Figure 4: AntDB's new generation stream processing engine

For example, for real-time statistical reports, all statistical indicators can be monitored in real time through SQL commands. For real-time alarms, the database can push every alarm record to the front-end application with millisecond-level latency, so the application no longer has to poll the alarm table at regular intervals.
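The difference between polling an alarm table and having the database push alarms can be sketched with Python's built-in `sqlite3` module. This is an illustrative stand-in, not AntDB-S syntax: the `readings` table, the `push_alarm` callback, the threshold of 100.0, and the device names are all assumptions made for the example. A trigger written in SQL defines the alarm rule, and the database calls the registered callback whenever an inserted row matches.

```python
import sqlite3
from typing import List, Tuple


def build_db(pushed: List[Tuple[str, float]]) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")

    def push_alarm(device: str, reading: float) -> int:
        # Stand-in for delivering the alarm to a front-end application.
        pushed.append((device, reading))
        return 1

    # Some SQLite builds block application-defined functions inside triggers
    # by default; this pragma allows them for this connection.
    conn.execute("PRAGMA trusted_schema = ON")
    # Expose the Python callback to SQL under the name push_alarm.
    conn.create_function("push_alarm", 2, push_alarm)
    conn.executescript(
        """
        CREATE TABLE readings (device TEXT, reading REAL);
        CREATE TRIGGER alarm_on_high_reading
        AFTER INSERT ON readings
        WHEN NEW.reading > 100.0
        BEGIN
            SELECT push_alarm(NEW.device, NEW.reading);
        END;
        """
    )
    return conn


def demo() -> List[Tuple[str, float]]:
    pushed: List[Tuple[str, float]] = []
    conn = build_db(pushed)
    conn.executemany(
        "INSERT INTO readings VALUES (?, ?)",
        [("pile-1", 42.0), ("pile-2", 130.5), ("pile-3", 99.9), ("pile-1", 101.0)],
    )
    conn.commit()
    return pushed  # filled by the trigger, with no polling query issued
```

The application never queries the `readings` table here. The rule lives in the database as SQL, and only rows that satisfy it reach the callback.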

Figure 5: Real-time data service platform for charging piles in a province

When replacing a traditional streaming engine, AntDB-S can save users a great deal of development and testing resources. Data security and ACID guarantees rely entirely on the underlying AntDB, which fundamentally ensures the consistency and security of the data. In addition, AntDB-S inherits all the capabilities supported by AntDB, such as high availability, disaster recovery, multi-tenancy, authentication, distributed mode, and transactions, which can reduce users' development and maintenance costs for streaming workloads by a factor of tens.

Typical business scenarios

- Real-time marketing: capture the required business information and data in real time, and proactively push instant data statistics and analysis services to users.

- Risk monitoring and real-time warning: provide warning rules tailored to the risk monitoring needs of different business systems, applicable to scenarios such as banking, policing, transportation, and urban security governance.

- Refined marketing: help industry customers build marketing databases, and standardize and streamline the marketing process based on the results of data mining and data analysis.

- Data sharing value: eliminate data silos; through real-time secure data computation, make data from multiple parties "available but not visible" and release its value without moving it; and create intelligent, visualized, and standardized data sharing and management.

About AntDB

AntDB was established in 2008. In the core systems of telecom operators, AntDB provides online services for more than 1 billion users in 24 provinces across China. With product features such as high performance, elastic scaling, and high reliability, AntDB can process one million core communications transactions per second at peak and has kept these systems running continuously and stably for nearly ten years. It has been deployed commercially in communications, finance, transportation, energy, the Internet of Things, and other industries.
